# Meter readings with OpenCV

Although we have a very moderate energy consumption I sometimes feel like tracking the meter readings over time would give some insights about the development over the year. This topic is not of greatest importance, but a good reason to implement an API for IoT applications from scratch to learn something new. And since it's cumbersome to transfer the readings by hand, wouldn't it be nice to just take an image of the meter and have the numbers read automatically?

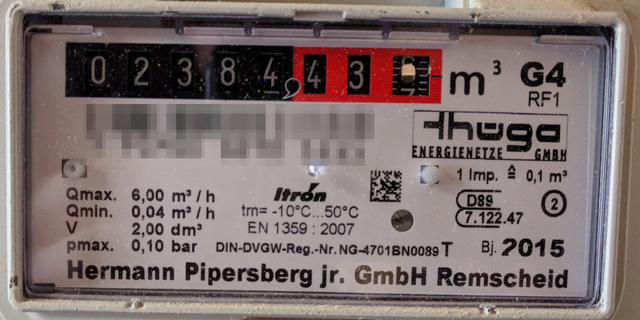

So I took some time to experiment how such a reading would be feasible. The meter looks like this:

# Trying off-the-shelf tools

# EAST, a text detector

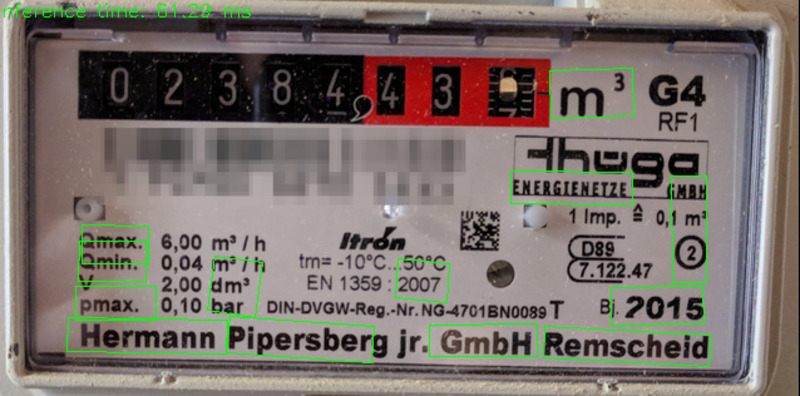

EAST stands for Efficient and Accurate Scene Text Detector. I did not have stumbled across this if there was no implementation in OpenCV. To give it a try, I did not even have to change the example. As it turns out, the detector detects a vast amount of text fragments in my sample image. However, it seems to have problems with scaling. So I had to tweak the image size parameters a bit to get detections in the various regions of the image, but not in a reliable way. The result image here is just one candidate, I had more luck with (parts of) the actual line of interest (02384), but nothing reproducible.

# Tesseract

Okay, consider the text detection to be solved for a moment. The plan was then to take the bounding boxes and apply Tesseract to actually read the value. I then wanted to check each box content with a regular expression or some such, but have been caught by reality when I fed the following snippet to tesseract. I had no luck to actually get a reading. So I skipped further exploration of this until I made progress in the ROI detection.

# A hand crafted approach

I'd like to proceed in two steps:

- Detect the region of interest, an axis aligned bounding box, with high robustness.

- Get a high quality image of only the digits and give

tesseractanother try.

For each step, I want to utilize the complete domain knowledge I have, in particular:

- The digits are framed by a black box,

- the reading consists of 5 digits,

- next to the digits is a red box.

Well, this calls for good old template matching as a fist shot? So here is my template. I filled the places with the digits with black, to get better results I would have to mask them out so that the parts with the digits are not scope of the matching. But this template is so descriptive for this task that I doubt there will be any problem.

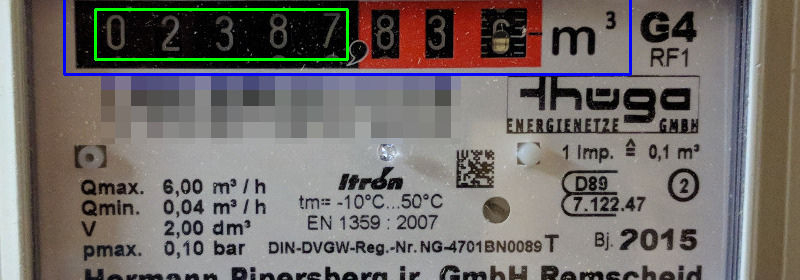

I got scale "invariant" (big air quotes) by transforming the source image several times before matching. And I tweaked the template a little bit to capture more of the context around, see the blue box here (which represents at the same time the result):

cv::Mat matched;

const double source_ratio = static_cast<double>(image.size[1]) / static_cast<double>(image.size[0]);

const size_t NUM_SCALES = 30;

cv::Mat best;

cv::Point best_min_loc;

double best_min = std::numeric_limits<double>::max();

for(size_t i = 0; i < NUM_SCALES; ++i) {

// resize image from 1.5 to 3.5 times the template width, evenly spaced

const double increment = 2.0 / NUM_SCALES;

const double width_factor = 1.5 + i * increment;

std::cout << "try: " << width_factor << std::endl;

const auto desired_width = static_cast<int>(0.5 + tmpl.size[1] * width_factor);

const auto desired_height = static_cast<int>(0.5 + desired_width / source_ratio);

cv::Mat resized_source;

cv::resize(image, resized_source, cv::Size(desired_width, desired_height));

cv::matchTemplate(resized_source, tmpl, matched, cv::TM_SQDIFF);

cv::Point min_loc, max_loc;

double min, max;

cv::minMaxLoc(matched, &min, &max, &min_loc, &max_loc);

if(min < best_min ) {

resized_source.copyTo(best);

best_min = min;

best_min_loc = min_loc;

std::cout << "found new best:" << resized_source.size << std::endl;

}

}

To transform the image and not the template has some advantages in this case: I can apply templates for the individual number and look up their locations by looking at the pixel positions in the old template, that's where the green box came from.

With a little bit of noise reduction (and morphologic operations), an image like this can be retrieved and I discovered some important settings for tesseract:

$ tesseract --psm 8 digits.jpg stdout digits

02387

The steps to remove the noise were (in my case):

- Apply adaptive threshold,

- erode & dilate with a rather small kernel,

- search for connected components (

cv::connectedComponentsWithStats) and - find the five (number of digits) biggest components, set the remainder to black.

Yay!

So long story short:

- Text detection with a pre-trained net may not be the best option if you are in some kind of controlled environment or need good detections within a single shot (no tracking or other probabilistic approach possible).

tesseractcan be configured to produce accurate results, but is very sensitive against noise etc. in the image.

# Further improvements

There are some things my approach is sensitive to, in particular:

- Image sensor and meter are not in a parallel plane. A remedy cound be to:

- Have four templates to the corners of the box (and tra to detect them),

- find feasible combinations of the locations,

- find a homography for each combination and unwarp the image to get a planar version,

- check this version against an undistorted template.

- Camera is rotated. An idea would be to

- apply edge detection and a Hough transform,

- determine the angle you would need to rotate the lines so that you have many lines around 0° or 90° (assuming that lines are either horizontal, vertical or distributed unoformly). This would be some sort of a principal axis transform.

- Did I already mention that I like simple solutions?

- Place tape on the meter masking the ROI.